Nov 12, 2025

How Lightwheel and World Labs’ Marble point toward a scalable Real2Sim world pipeline for large-scale embodied AI evaluation

Generating the World Layer: How Lightwheel and World Labs Scale Robotics Evaluation

Overview

Scaling evaluation is one of the hardest unsolved problems in embodied AI.

As robot foundation models grow in capability, evaluation has become the limiting factor—not training. Measuring real progress requires testing across thousands of environments, tasks, and failure modes. Real-world testing alone cannot keep up: it is slow, expensive, difficult to reproduce, and fundamentally limited in coverage.

Simulation is the only viable path to scalable evaluation. Lightwheel leverages its physics-grounded Real2Sim pipeline to scale high-quality, physically accurate, and diverse SimReady assets. However, one critical component of simulation has remained a long-term challenge and bottleneck: world creation.

A scalable Real2Sim pipeline requires coverage across environments that reflect the complexity and diversity of real worlds—from homes and factories to retail and service environments. Neither manual reconstruction nor dense sensing can deliver this level of scale or fidelity.

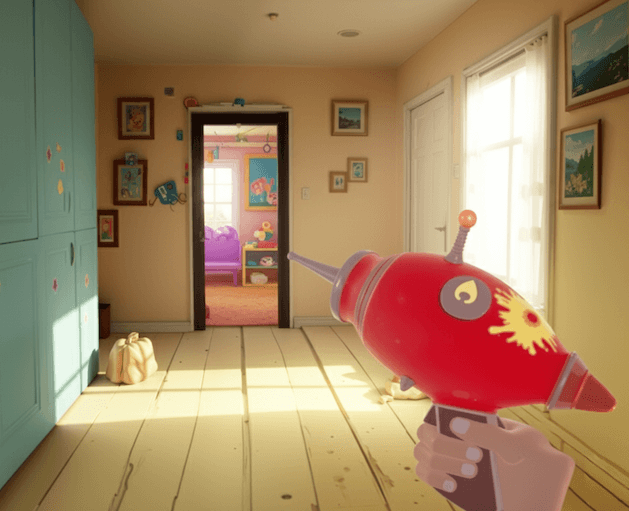

This case study highlights a series of Marble-powered demonstrations, developed in collaboration with Lightwheel, that explore how fast, low-cost environment generation can supply the environment scale that evaluation platforms like RoboFinals require.

Across multiple demos and videos, the same core workflow is repeated:

lightweight real-world capture → Marble world generation → integration with Lightwheel SimReady assets → simulation → behavior execution → evaluation

Collaboration Overview: Lightwheel × Marble

Lightwheel is building simulation-centric infrastructure for physical AI, with a strong emphasis on evaluation at scale. Its platform spans world integration, physics-accurate SimReady assets, behavior execution, and standardized benchmarking through RoboFinals—treating evaluation not as a downstream task, but as a system-level capability that must scale alongside models.

Lightwheel approaches physical AI as an integrated system in which world generation, asset fidelity, behavior execution, and evaluation are tightly coupled. In this view, evaluation quality is fundamentally constrained by the quality of generated worlds. World generation quality itself depends on two independent dimensions:

- Physically accurate, interaction-ready assets

- Diverse, structurally representative environments

Without both, even the most sophisticated evaluation frameworks fail to produce reliable or comparable results.

Through EgoSuite, Lightwheel operates one of the largest egocentric human data pipelines in the field, delivering over 20,000 hours of real-world data weekly. This continuous access to real environments provides grounding and coverage, but it also exposes a core limitation: human-operated real-world data alone cannot scale evaluation.

A scalable Real2Sim environment pipeline is required to transform real observations into repeatable, controllable simulation environments.

Most current Real2Sim systems focus on high-fidelity digital twins created through dense sensing and heavy reconstruction. While visually impressive, these approaches are expensive, operationally complex, and poorly suited to the coverage and volume requirements of embodied AI.

Recent pioneering work from Stanford SVL introduced the concept of digital cousins: environments that are physically plausible and structurally representative, without being exact replicas. Lightwheel is among the first teams to adopt this thesis, shifting environment creation from exact reconstruction toward scalable approximation and, ultimately, scalable evaluation.

This positioning made Lightwheel an ideal partner to explore Marble’s core capability: turning minimal real-world input into simulation-ready worlds quickly and repeatedly.

A Shared Workflow: From Lightweight Capture to Sim-Ready Worlds

Rather than focusing on a single environment or task, this collaboration explored a generalizable workflow applicable across many scenarios.

World Labs’ Marble drastically reduces the cost and time required to generate large numbers of environments, removing the need for dense scanning rigs or labor-intensive manual reconstruction, both of which become prohibitive at this scale.

All demonstrations presented here followed the same principles:

- Lightweight capture, such as a single 360° image

- Marble’s Gaussian Splat–based world generation

- Lightwheel’s SimReady assets

- Conversion into formats compatible with modern robotics simulators

Rather than producing perfect digital twins, Marble generates physically plausible digital cousins—environments designed for speed, coverage, and immediate use in evaluation.

How Marble Fits into the Workflow

Marble serves as the critical world-generation engine within Lightwheel’s broader world–data–evaluation infrastructure.

Across all demonstrations, Marble provides a fast and scalable way to convert lightweight real-world inputs into simulation-ready environments that can be systematically integrated, exercised, and evaluated at scale.

World Generation with Marble

Each demo begins with a minimal real-world capture, such as a single consumer-grade 360° image or a small set of casually captured photos, requiring no dense scanning rigs, specialized sensors, or manual reconstruction. Marble processes this input to generate a navigable 3D Gaussian Splat world, capturing layout, geometry, lighting, and depth.
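To make "3D Gaussian Splat world" concrete: such a world is a large set of anisotropic 3D Gaussians, each with a center, an orientation, per-axis scales, an opacity, and a color. The sketch below follows the parameterization used in the original 3D Gaussian Splatting work, building each covariance as Σ = R S Sᵀ Rᵀ from a unit quaternion and a scale vector. It is not Marble's internal or export format; the `Splat` class and its field names are illustrative assumptions.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]
Quat = tuple[float, float, float, float]  # (w, x, y, z)
Mat3 = list[list[float]]


def quat_to_rotation(q: Quat) -> Mat3:
    """Normalize a quaternion and convert it to a 3x3 rotation matrix."""
    w, x, y, z = q
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


@dataclass
class Splat:
    """One Gaussian primitive (hypothetical field layout, for illustration only)."""
    center: Vec3
    rotation: Quat
    scale: Vec3      # standard deviation along each local axis
    opacity: float   # in [0, 1]
    color: Vec3      # plain RGB; the original 3DGS stores spherical-harmonic coefficients

    def covariance(self) -> Mat3:
        """Sigma = R S S^T R^T = M M^T, where M = R S and S = diag(scale)."""
        r = quat_to_rotation(self.rotation)
        m = [[r[i][j] * self.scale[j] for j in range(3)] for i in range(3)]
        return [[sum(m[i][k] * m[j][k] for k in range(3)) for j in range(3)]
                for i in range(3)]

    def weight(self, p: Vec3) -> float:
        """Opacity times the unnormalized Gaussian exp(-0.5 * d^T Sigma^-1 d) at p."""
        r = quat_to_rotation(self.rotation)
        d = [p[i] - self.center[i] for i in range(3)]
        # Rotate the offset into the splat's local frame (R^T d); there,
        # Sigma^-1 is diagonal with entries 1 / scale^2.
        local = [sum(r[k][j] * d[k] for k in range(3)) for j in range(3)]
        mahalanobis_sq = sum((local[j] / self.scale[j]) ** 2 for j in range(3))
        return self.opacity * math.exp(-0.5 * mahalanobis_sq)
```

In 3D Gaussian Splatting, a renderer projects many such primitives onto the image plane and alpha-blends them front to back, which is what lets the generated world be viewed from new camera positions.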

These worlds provide the spatial structure required for robot navigation and perception, while reducing environment creation time from weeks to minutes. This makes it practical and economically viable to generate many worlds rather than carefully curating a few.

Simulation Integration

Once generated, Marble worlds are exported and converted to USD using NVIDIA Omniverse NuRec, then imported into NVIDIA Isaac Sim.

Within Isaac Sim, Lightwheel combines its physically accurate SimReady assets with the Marble-generated scenes. The environments are further augmented with:

- Robots and sensors

- Task definitions

- Evaluation hooks
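One way to picture this composition is as a scene description that layers simulation content on top of the converted world. The sketch below is illustrative only: `SceneSpec`, `RobotSpec`, `TaskSpec`, their fields, and the example paths are hypothetical, not Isaac Sim, NuRec, or Lightwheel APIs. In practice this content would more likely be authored as USD prims and simulator configuration.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class RobotSpec:
    name: str
    sensors: list[str] = field(default_factory=list)


@dataclass
class TaskSpec:
    name: str
    is_success: Callable[[dict], bool]  # predicate over a final-state dict


@dataclass
class SceneSpec:
    """Hypothetical description of one evaluation scene built on a Marble world."""
    world_usd: str  # path to the converted world (illustrative)
    simready_assets: list[str] = field(default_factory=list)
    robots: list[RobotSpec] = field(default_factory=list)
    tasks: list[TaskSpec] = field(default_factory=list)
    eval_hooks: list[Callable[[str, dict], None]] = field(default_factory=list)

    def missing_components(self) -> list[str]:
        """List what still keeps this world from being an active simulation environment."""
        missing = []
        if not self.simready_assets:
            missing.append("SimReady assets")
        if not self.robots:
            missing.append("robots")
        elif not all(r.sensors for r in self.robots):
            missing.append("sensors")
        if not self.tasks:
            missing.append("task definitions")
        if not self.eval_hooks:
            missing.append("evaluation hooks")
        return missing


scene = SceneSpec(world_usd="worlds/kitchen_001.usd")
print(scene.missing_components())
# ['SimReady assets', 'robots', 'task definitions', 'evaluation hooks']
```

Keeping the checklist explicit mirrors the point above: a generated world only becomes usable for benchmarking once assets, robots with sensors, tasks, and evaluation hooks are attached.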

Each Marble-generated world becomes an active simulation environment, capable of supporting behavior execution and benchmarking.

From Worlds to Behaviors to Evaluation

With Marble enabling scalable world generation, Lightwheel was able to run a closed-loop workflow across all demonstrations.

Behavior Execution

Robots can be trained or teleoperated across many Marble-generated environments using consistent task definitions. This allows behaviors to be stress-tested against environmental variation, rather than tuned to a small number of fixed scenes.
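The property that matters here is that the task definition stays fixed while the world varies. A minimal rollout loop might look like the following, where `run_episode` is a hypothetical placeholder for a simulator rollout, stubbed with a seeded random outcome so the sketch runs on its own; the world IDs and task name are also made up.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class EpisodeResult:
    world_id: str
    task: str
    seed: int
    success: bool


def run_episode(world_id: str, task: str, seed: int) -> bool:
    """Hypothetical stand-in for a policy rollout in simulation."""
    # Deterministic per (world, task, seed); the 0.7 success rate is arbitrary.
    rng = random.Random(f"{world_id}:{task}:{seed}")
    return rng.random() < 0.7


def run_across_worlds(world_ids: list[str], task: str, seeds: list[int]) -> list[EpisodeResult]:
    """Run one fixed task definition in every world, so variation comes from the environment."""
    results = []
    for world_id in world_ids:
        for seed in seeds:
            success = run_episode(world_id, task, seed)
            results.append(EpisodeResult(world_id, task, seed, success))
    return results


worlds = [f"marble_world_{i:03d}" for i in range(5)]
results = run_across_worlds(worlds, "pick_and_place", seeds=[0, 1, 2])
```

Seeding each episode by world, task, and seed keeps reruns reproducible, which matters when the same worlds are reused later for standardized evaluation.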

Evaluation (RoboFinals)

The same Marble worlds are reused for standardized evaluation:

- Measuring robustness under layout and appearance variation

- Comparing model performance across shared world distributions

- Identifying failure modes that only emerge at scale
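As one illustration of these three uses, the sketch below aggregates episode outcomes into per-model success rates, a per-variation breakdown, and a list of low-success worlds. The record layout, the `variation` labels, and the 0.5 threshold are assumptions for the example; the post does not describe RoboFinals' actual metrics.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Episode:
    model: str
    world_id: str
    variation: str  # factor this world varies, e.g. "layout" or "appearance"
    success: bool


def success_rate(episodes: list[Episode]) -> float:
    return sum(e.success for e in episodes) / len(episodes) if episodes else 0.0


def summarize(episodes: list[Episode], fail_threshold: float = 0.5) -> dict:
    by_model = defaultdict(list)
    by_model_variation = defaultdict(list)
    by_model_world = defaultdict(list)
    for e in episodes:
        by_model[e.model].append(e)
        by_model_variation[(e.model, e.variation)].append(e)
        by_model_world[(e.model, e.world_id)].append(e)
    return {
        # Comparable headline numbers, since every model runs on the same worlds.
        "overall": {m: success_rate(eps) for m, eps in by_model.items()},
        # Robustness: success under each kind of layout or appearance variation.
        "by_variation": {k: success_rate(eps) for k, eps in by_model_variation.items()},
        # Candidate failure modes: (model, world) pairs below the threshold.
        "weak_worlds": sorted(
            k for k, eps in by_model_world.items() if success_rate(eps) < fail_threshold
        ),
    }
```

Because the world set is shared, a difference in these numbers reflects the models rather than the environments they happened to be tested in.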

Because Marble worlds are fast to generate, evaluation scenarios can evolve alongside models, instead of becoming static benchmarks.

Technical Workflow

Across all demonstrations, the collaboration validated a clean, repeatable Real2Sim pipeline built around Marble:

- Capture a lightweight real-world input (e.g., a 360° image)

- Generate a 3D Gaussian Splat world in Marble

- Export splat data

- Convert to USD using Omniverse NuRec

- Import into NVIDIA Isaac Sim

- Combine with Lightwheel SimReady assets

- Add robots, sensors, tasks, and evaluation logic

This workflow is now documented in an NVIDIA technical guide, enabling other robotics teams to adopt the same Marble-based approach.
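The ordering of the steps above can be captured as a staged pipeline in which each stage consumes the artifact the previous one produced. This is a hypothetical orchestration sketch: the stage and artifact names are invented to mirror the list, and the bodies only record a trace. Nothing here calls Marble, Omniverse NuRec, or Isaac Sim; the real export and conversion steps are covered by the NVIDIA guide mentioned above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    consumes: str  # artifact kind this stage expects as input
    produces: str  # artifact kind this stage emits


# Hypothetical stage list mirroring the steps above. Comments name the tool the
# post associates with each step; nothing here actually calls those tools.
PIPELINE = [
    Stage("generate_world", "capture", "splat_world"),       # Marble
    Stage("export_splat", "splat_world", "splat_file"),
    Stage("convert_to_usd", "splat_file", "usd_world"),       # Omniverse NuRec
    Stage("import_into_sim", "usd_world", "sim_scene"),       # Isaac Sim
    Stage("add_simready_assets", "sim_scene", "sim_scene"),   # Lightwheel assets
    Stage("add_robots_tasks_eval", "sim_scene", "eval_env"),
]


def validate(stages: list[Stage], start: str = "capture") -> None:
    """Fail fast if any stage does not consume what the previous stage produced."""
    current = start
    for s in stages:
        if s.consumes != current:
            raise ValueError(f"{s.name} expects {s.consumes!r}, got {current!r}")
        current = s.produces


def run(capture_id: str, stages: list[Stage]) -> list[str]:
    """Placeholder execution: return the artifact trace for one capture."""
    validate(stages)
    return [f"{capture_id}:{s.produces}" for s in stages]


# The same validated pipeline is reused for every capture.
traces = {cid: run(cid, PIPELINE) for cid in ["kitchen_01", "warehouse_02"]}
print(traces["kitchen_01"][-1])  # kitchen_01:eval_env
```

Making the input/output contract explicit is one way to keep a many-world pipeline repeatable: a misordered step fails immediately instead of yielding a scene that silently skipped, for example, USD conversion.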

Results & Impact

Using Marble as the world-generation backbone across multiple demonstrations produced clear gains:

- Massive speedup: environment creation reduced from weeks to minutes

- Better data scalability: feasible to generate many worlds instead of a handful

- Lower cost: no dense scanning rigs or heavy reconstruction pipelines

- Evaluation-ready worlds: scenes usable immediately for simulation and benchmarking

Most importantly, Marble shifted the core evaluation constraint from “How do we build enough environments?” to “How do we design better evaluation tasks?”

Why This Matters

Evaluation is key to the success of robotic development, but it can only scale if evaluation environments scale with it.

Marble enables a different tradeoff than traditional Real2Sim pipelines by:

- Prioritizing coverage and iteration over perfect reconstruction

- Favoring digital cousins over costly digital twins

- Making real-world grounding cheap, fast, and repeatable

For Lightwheel, Marble strengthens the World Layer, enabling RoboFinals to function as a repeatable, environment-rich evaluation framework.

For Marble, these demonstrations collectively showcase a powerful capability: turning minimal real-world input into simulation-ready worlds that unlock evaluation at scale.

Together, this collaboration demonstrates how World Labs’ Marble can plug into a broader simulation and evaluation infrastructure, enabling scalable, repeatable measurement of physical AI progress.

Looking Ahead

This is just the beginning. Future demonstrations will build on this shared workflow by:

- Generating larger and more varied environments

- Introducing semantic and structural control over generated worlds

- Expanding beyond indoor scenes to more complex evaluation settings

As embodied AI systems grow, the ability to generate worlds as fast as models are trained will become essential.

These demonstrations show how Marble makes that future practical by accelerating the most constrained part of the pipeline: world creation.
